The Open-Source Alternative to OpenAI Deep Researcher: Ollama Deep Research

Tired of relying on proprietary AI tools for research? Ollama Deep Research is an open-source, local alternative that offers privacy, flexibility, and cost efficiency. This guide explores what it is, how it works, and where it has an edge over tools like OpenAI Deep Researcher and Google’s Deep Research.

Before diving into Ollama Deep Research, here’s a quick tip for developers: If you’re looking for an all-in-one platform to design, test, debug, and monitor APIs, check out Apidog. Apidog simplifies the entire API lifecycle, making it the perfect companion for tools like Ollama. Whether you’re building, debugging, or scaling your API workflows, Apidog has you covered. Now, let’s explore how Ollama Deep Research can revolutionize your research process!

What is Ollama Deep Research?

Ollama Deep Research is a local web research and report-writing assistant that automates searching, summarizing, and refining information. It uses locally hosted large language models (LLMs) to:

✅ Generate search queries based on your topic

✅ Retrieve relevant sources from the web

✅ Summarize information into a structured markdown report

✅ Identify knowledge gaps through iterative research cycles

Ideal for researchers, students, and professionals, Ollama Deep Research protects privacy by running the model and keeping your summaries on your own machine; only the search queries go out to the search provider.

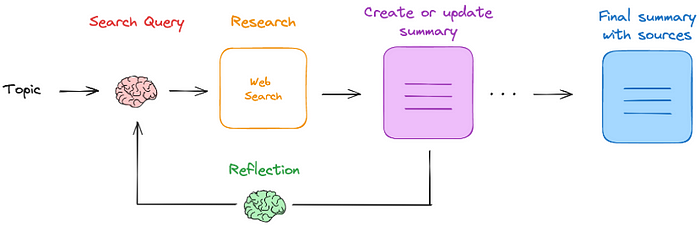

How Does Ollama Deep Research Work?

Ollama follows a structured, automated research process:

1. Input Your Topic

Enter your research question or subject.

2. Generate Search Queries

A locally hosted LLM formulates relevant web search queries.

3. Retrieve Sources

Ollama uses search APIs like DuckDuckGo, Tavily, or Perplexity to find the most relevant content.

4. Summarize Key Insights

The LLM extracts and compiles essential points from retrieved sources.

5. Identify Knowledge Gaps

Ollama analyzes the summary to find missing information.

6. Refine & Iterate

The tool performs additional searches and updates the summary until it reaches completeness.

7. Generate a Final Report

A detailed markdown document is created, including citations and sources.

This automated, iterative process produces a comprehensive, well-structured report while keeping your data private.
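The seven steps above can be sketched as a small loop. This is a minimal illustration, not the project's actual code: `ResearchState`, `run_research`, and the three callables are hypothetical names, and the stub functions stand in for the local LLM and the search API so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ResearchState:
    topic: str
    summary: str = ""
    sources: List[str] = field(default_factory=list)


def run_research(
    topic: str,
    generate_query: Callable[[str, str], str],
    search: Callable[[str], List[dict]],
    summarize: Callable[[str, List[dict]], str],
    max_loops: int = 3,
) -> ResearchState:
    """Repeat query -> search -> summarize for a fixed number of loops."""
    state = ResearchState(topic=topic)
    for _ in range(max_loops):
        # Steps 2 and 5: the query is built from the topic and the summary
        # so far, so a follow-up query can target whatever is still missing.
        query = generate_query(state.topic, state.summary)
        # Step 3: each result is assumed to be a dict with "url" and "content".
        results = search(query)
        # Steps 4 and 6: fold the new results into the running summary.
        state.summary = summarize(state.summary, results)
        for result in results:
            if result["url"] not in state.sources:
                state.sources.append(result["url"])
    return state


# Offline stubs standing in for the LLM and the search engine.
def fake_generate_query(topic: str, summary: str) -> str:
    return topic if not summary else f"{topic} open questions"


def fake_search(query: str) -> List[dict]:
    return [{"url": f"https://example.com/{len(query)}", "content": f"notes on {query}"}]


def fake_summarize(summary: str, results: List[dict]) -> str:
    new_text = " ".join(r["content"] for r in results)
    return f"{summary} {new_text}".strip()


state = run_research("local LLMs", fake_generate_query, fake_search, fake_summarize, max_loops=2)
print(state.summary)
print(state.sources)
```

Passing the LLM and search calls in as functions keeps the loop itself independent of any particular model or search provider, which mirrors the way the tool lets you swap both.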

How to Use Ollama Deep Research: A Step-by-Step Guide

Getting started with Ollama Deep Research is straightforward. Follow these steps to set up your environment, configure your search engine, and start generating comprehensive research reports.

Step 1: Set Up Your Environment

1. Download Ollama:

- Visit the official Ollama website and download the latest version for your operating system (Windows, macOS, or Linux).

2. Pull a Local LLM:

- Use the following command to download a local large language model (LLM) like DeepSeek:

ollama pull deepseek-r1:8b

3. Clone the Repository:

- Clone the Ollama Deep Research repository using Git:

git clone https://github.com/ollama-deep-research

4. Create a Virtual Environment (Recommended):

- For Mac/Linux:

python3 -m venv venv
source venv/bin/activate

- For Windows:

python -m venv venv
venv\Scripts\activate

Step 2: Configure Your Search Engine

1. Default Search Engine:

- Ollama uses DuckDuckGo by default, which doesn’t require an API key.

2. Alternative Search Engines:

- To use Tavily or Perplexity, add their API keys to your .env file:

SEARCH_API=tavily # or perplexity
TAVILY_API_KEY=your_api_key_here # or PERPLEXITY_API_KEY

Step 3: Launch the Assistant

1. Install Dependencies:

- Install the required Python packages:

pip install -r requirements.txt

2. Start the LangGraph Server:

- Launch the server with:

python -m langgraph.server

3. Access LangGraph Studio:

- Open the provided URL (e.g., http://127.0.0.1:2024) in your browser to access the LangGraph Studio Web UI.

4. Configure Settings:

- Select your preferred search engine (DuckDuckGo, Tavily, or Perplexity).

- Set the name of your local LLM (e.g., deepseek-r1:8b).

- Adjust the depth of research iterations (default is 3).
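The search engine, model name, and iteration depth can be thought of as one small settings object. The sketch below reads them from environment variables and checks that an API key is present when the chosen engine needs one. `SEARCH_API`, `TAVILY_API_KEY`, and `PERPLEXITY_API_KEY` come from the .env example in Step 2; the `Settings` class, `load_settings`, and the `LOCAL_LLM` and `MAX_LOOPS` variable names are assumptions made for illustration and may not match what the project actually reads.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Environment variable holding the key for each engine; DuckDuckGo needs none.
API_KEY_VARS = {
    "duckduckgo": None,
    "tavily": "TAVILY_API_KEY",
    "perplexity": "PERPLEXITY_API_KEY",
}


@dataclass(frozen=True)
class Settings:
    search_api: str
    api_key: Optional[str]
    local_llm: str
    max_loops: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from a mapping of environment variables."""
    search_api = env.get("SEARCH_API", "duckduckgo").lower()
    if search_api not in API_KEY_VARS:
        raise ValueError(f"Unsupported SEARCH_API: {search_api!r}")
    key_var = API_KEY_VARS[search_api]
    api_key = env.get(key_var) if key_var else None
    if key_var and not api_key:
        raise ValueError(f"{key_var} must be set when SEARCH_API={search_api}")
    return Settings(
        search_api=search_api,
        api_key=api_key,
        # LOCAL_LLM and MAX_LOOPS are hypothetical names used only in this sketch.
        local_llm=env.get("LOCAL_LLM", "deepseek-r1:8b"),
        max_loops=int(env.get("MAX_LOOPS", "3")),
    )


print(load_settings({"SEARCH_API": "tavily", "TAVILY_API_KEY": "your_api_key_here"}))
```

Failing early on a missing key is a deliberate choice here: it surfaces a configuration mistake before the first search request rather than partway through a research run.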

Step 4: Input Your Query

1. Enter Your Topic:

- Type your research topic or question into the LangGraph Studio interface.

2. Generate Report:

- Ollama will create a detailed markdown report based on your input, using the selected search engine and LLM.
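To give a feel for what a markdown report with cited sources might look like, here is a small rendering sketch. The layout (a title, a summary section, and a de-duplicated source list) and the `render_report` function are assumptions for illustration; the report the tool actually produces may be structured differently.

```python
from typing import List, Tuple


def render_report(topic: str, summary: str, sources: List[Tuple[str, str]]) -> str:
    """Render a topic, summary, and (title, url) pairs as a markdown document."""
    lines = [f"# {topic}", "", "## Summary", "", summary.strip(), "", "## Sources", ""]
    seen = set()
    for title, url in sources:
        # Several research loops can return the same page; list each URL once.
        if url in seen:
            continue
        seen.add(url)
        lines.append(f"- [{title}]({url})")
    return "\n".join(lines) + "\n"


report = render_report(
    "Local LLMs",
    "Local models keep inference on your own hardware.",
    [
        ("Example A", "https://example.com/a"),
        ("Example A again", "https://example.com/a"),
        ("Example B", "https://example.com/b"),
    ],
)
print(report)
```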

Why This Setup Works

This step-by-step process ensures you can leverage Ollama Deep Research for efficient, private, and customizable research. By running locally, you maintain full control over your data while benefiting from powerful search and summarization capabilities. Whether you’re a student, professional, or hobbyist, Ollama Deep Research makes web research faster and more reliable.

Why Choose Ollama Deep Research?

Ollama stands out from proprietary tools like OpenAI Deep Researcher and Google’s Deep Research for several reasons:

1. Privacy and Control:

- The LLM runs locally, so your prompts and summaries stay on your machine.

- Cloud tools such as OpenAI Deep Researcher process your requests on external servers; with Ollama, only the web search queries leave your machine.

2. Cost Efficiency:

- Ollama is open-source and free (aside from hardware costs).

- Proprietary tools like OpenAI Deep Researcher require expensive subscriptions or API fees.

3. Customization:

- Use any local LLM (e.g., LLaMA-2, DeepSeek) or fine-tune models for specific needs.

- Proprietary tools offer limited customization and rely on fixed models.
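Swapping models is mostly a matter of changing one string. The sketch below builds a request for Ollama's local HTTP API, which listens on port 11434 by default and accepts a JSON body with `model`, `prompt`, and `stream` fields on `/api/generate`. The helper names `build_generate_request` and `generate` are hypothetical, and `generate` assumes an Ollama server is running with the named model already pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to a running Ollama server and return the response text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Building the request needs no server; only generate() touches the network.
request = build_generate_request("deepseek-r1:8b", "Suggest one web search query about solar panels.")
print(request.full_url)
print(request.data.decode("utf-8"))
```

With a server running, `generate("deepseek-r1:8b", "...")` and a call with any other pulled model name differ only in the first argument.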

Key Features of Ollama Deep Research

- Local Model Support: Use any locally hosted LLM for flexibility and performance.

- Iterative Search and Summarization: Multiple cycles ensure thorough coverage and knowledge gap identification.

- Markdown Report Generation: Easy-to-read reports with all sources cited.

- Privacy-Preserving: Only search queries are sent externally, and you can use privacy-focused engines like DuckDuckGo.

Pricing

Ollama Deep Research is free and open-source. The only costs are hardware-related (e.g., electricity and maintenance). Compare this to:

- OpenAI Deep Researcher: Requires expensive subscriptions or API fees.

- Google’s Deep Research: Included in the Google One AI Premium plan (about $20/month), a recurring cost that a local setup avoids.

Conclusion

Ollama Deep Research is a powerful, open-source alternative to proprietary tools like OpenAI Deep Researcher. It offers unmatched privacy, customization, and cost efficiency, making it ideal for researchers who value control over their data and process. Whether you’re a student, professional, or curious learner, Ollama Deep Research provides the tools you need to dive deep into any topic.