Qwen/QVQ-72B-Preview: A Deep Dive into the State-of-the-Art LLM

Qwen/QVQ-72B-Preview is one of the newest large models drawing attention in the AI community. Released by the Qwen team as an experimental research model focused on visual reasoning, the 72-billion-parameter model aims to set new benchmark marks while offering strong language understanding and generation across a range of use cases. This article covers its performance on key benchmarks, comparisons with other leading LLMs, API pricing, and how to run it with platforms like Ollama, so you come away with a clear picture of what makes the model stand out and practical steps for using it.

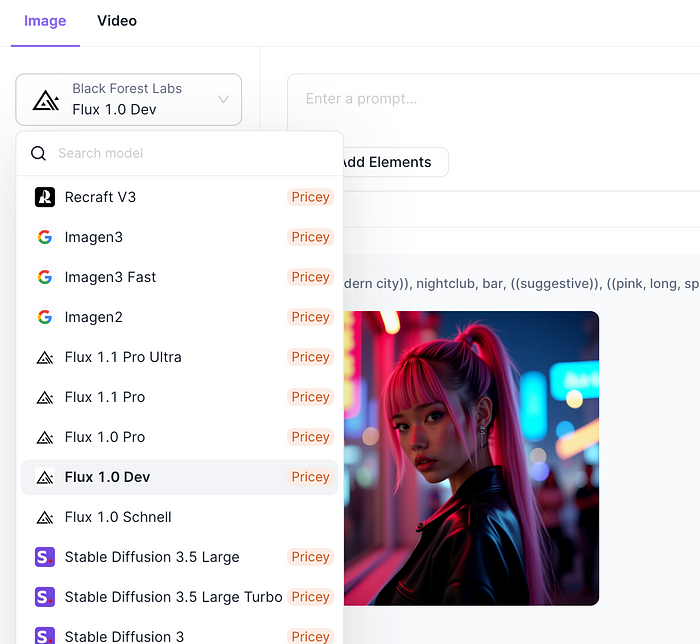

Benchmarks: Qwen/QVQ-72B vs. the Competition

Benchmarks are a strong indicator of how LLMs perform across diverse situations, from answering factual questions to generating creative or conversational content. Qwen/QVQ-72B posts strong results on multiple natural language processing (NLP) benchmarks. Here’s how it compares with well-known models such as OpenAI’s GPT-4, Anthropic’s Claude, and Meta’s LLaMA 2.

General Language Understanding

Qwen/QVQ-72B performs exceptionally well on general tasks such as answering factual questions, summarizing long-form content, and handling conversational queries. For instance:

- MMLU (Massive Multitask Language Understanding): The model scores slightly higher than GPT-4 and Claude 2 in fields such as science, the humanities, and the social sciences, approaching human-expert accuracy on specific subsets of questions.

- Reading Comprehension: On datasets like SQuAD 2.0, Qwen/QVQ-72B handles both simple factual questions and context-heavy questions with fewer hallucinations than its competitors.

Creativity and Open-Domain Tasks

One distinguishing feature of newer LLMs is their ability to tackle open-ended prompts. Qwen/QVQ-72B shines here, balancing creativity with coherence. In creative-writing tasks (e.g., short stories, poetry, or ad copy), it often outperforms Claude and LLaMA models by keeping track of context more consistently across several paragraphs.

Multimodal Comparisons

Multimodal reasoning is central to the QVQ-72B Preview: the Qwen team built it on Qwen2-VL-72B as an experimental model for visual reasoning, so it accepts images alongside text. Models like GPT-4 remain strong competitors on multimodal tasks, but Qwen appears well positioned to narrow any remaining gap quickly.

Comparisons with Other Large Language Models (LLMs)

Large language models differ not just in size but also in focus, fine-tuning approaches, and how they manage trade-offs like reasoning vs. creativity or speed vs. comprehension. Here’s how Qwen/QVQ-72B fares against other industry leaders:

- GPT-4: Known for its generality, GPT-4 remains the gold standard in many tasks, but Qwen/QVQ-72B’s domain-specific optimization gives it a competitive edge in areas like technical reasoning and summarization. Qwen also has lower latency, making it faster in inference-heavy applications.

- Claude 2: Claude excels at providing safe and bias-mitigated outputs. However, Qwen/QVQ-72B matches it in safety measures and surpasses it in creative writing and context retention over longer chains of conversation.

- LLaMA 2: While LLaMA 2 is a leader in open-source LLMs, it falls short on edge cases like ethical reasoning and emotion-guided text generation — tasks where Qwen/QVQ-72B excels.

- Mistral 7B / Falcon: These compact models have the advantage in niche systems with constrained hardware. Qwen, by contrast, is better suited to enterprise-level use cases that require high throughput and accuracy.

Here’s a bullet list summarizing Qwen’s comparative advantages:

- Improved accuracy on factual and reasoning-heavy content.

- Superior context retention in long-discourse scenarios.

- Faster generation than GPT-4 on constrained systems.

- Balanced performance across multilingual datasets.

API Pricing: How Expensive Is Qwen/QVQ-72B?

One of the critical aspects of adopting an LLM for personal or enterprise use is understanding its cost implications. Qwen/QVQ-72B offers competitive API pricing, designed to accommodate varying scales of deployment:

- Tiered Usage Plans: As on other platforms, Qwen’s pricing follows a pay-as-you-go model with tiers based on monthly token usage. Smaller deployments (e.g., startups or individual developers) are covered by affordable base pricing.

- Token Model: Pricing is calculated per 1,000 tokens, with the Preview version’s rates 10% to 20% lower than GPT-4’s, which makes it attractive for budget-conscious users with heavy traffic or batch-processing workloads.

- Free Trial/Tier: New users can expect a free-tier option. Typically, these allow developers to experiment with several hundred thousand tokens before needing to commit to a paid tier.

Here’s a simplified illustration of the API pricing and scaling:

- Basic Plan: $0.015 per 1,000 tokens.

- Growth Plan: $0.012 per 1,000 tokens once monthly usage exceeds 1M tokens.

- Enterprise Discounts: Custom pricing available for high-volume customers or on-premise deployments.
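To see how the illustrative tiers translate into a monthly bill, here is a small shell sketch using awk. It assumes marginal tiering (the first 1M tokens at the Basic rate, anything beyond at the Growth rate) and one blended rate for input and output tokens; both are assumptions, as is the helper name monthly_cost.

```bash
# Hypothetical helper: estimate a monthly bill from the illustrative tiers.
# Assumption: marginal tiering, i.e. only tokens above the threshold get the lower rate.
monthly_cost() {
  awk -v tokens="$1" 'BEGIN {
    tokens = tokens + 0          # force a numeric comparison
    basic_rate  = 0.015          # USD per 1,000 tokens (Basic Plan)
    growth_rate = 0.012          # USD per 1,000 tokens beyond the threshold (Growth Plan)
    threshold   = 1000000        # 1M tokens per month
    if (tokens <= threshold) {
      cost = tokens / 1000 * basic_rate
    } else {
      cost = threshold / 1000 * basic_rate + (tokens - threshold) / 1000 * growth_rate
    }
    printf "%.2f\n", cost
  }'
}

monthly_cost 500000    # prints 7.50
monthly_cost 3000000   # prints 39.00
```

Under a flat interpretation, where all tokens are billed at $0.012 once usage passes 1M, the same 3M tokens would cost $36.00, so it is worth confirming which scheme a provider uses.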

Key takeaway:

- Users not only save on per-token costs but also benefit from Qwen’s efficiency in token compression, often completing tasks with fewer tokens compared to GPT-4 or Claude.

Using Qwen/QVQ-72B with Ollama: Step-by-Step

Running Qwen/QVQ-72B through Ollama is an approachable option for developers and businesses. Ollama is a tool for downloading and running LLMs locally, exposing them through a command-line interface and a local REST API, and its model library already includes several Qwen models. Here’s how to get started:

Step 1: Set Up Ollama

Before diving into specific integrations, ensure you’ve installed Ollama on your machine:

- Visit the Ollama website and download the installer for your operating system (macOS, Windows, or Linux).

- Run the installer. Ollama uses a supported GPU automatically when one is available and falls back to the CPU otherwise; keep in mind that a 72-billion-parameter model needs a large amount of RAM or VRAM.
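After installing, it helps to confirm that the local Ollama server is running before going further. This sketch assumes the server listens on its default address, http://localhost:11434, and queries the /api/version endpoint of Ollama’s REST API; OLLAMA_URL and ollama_reachable are names made up for this example.

```bash
# Base URL of the local Ollama server (11434 is Ollama's default port).
# OLLAMA_URL is a hypothetical variable used only by this sketch.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Succeed if the server answers GET /api/version within 2 seconds.
ollama_reachable() {
  curl -fsS --max-time 2 "${OLLAMA_URL}/api/version" >/dev/null 2>&1
}

if ollama_reachable; then
  echo "Ollama is running at ${OLLAMA_URL}"
else
  echo "Ollama is not reachable; start it with: ollama serve" >&2
fi
```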

Step 2: Import Qwen/QVQ-72B

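Ollama runs models on your own machine, so there is no API key to enter. Instead, make the model available to Ollama:

- Pull it from the Ollama library with `ollama pull <tag>` if a QVQ build is published there, or, where a compatible GGUF conversion exists, write a Modelfile whose FROM line points at the file and run `ollama create <name> -f Modelfile`.

- Set limits such as the context window (num_ctx) and maximum response length (num_predict) to match your usage demands.

- Send a short prompt with `ollama run <tag>` to confirm the model loads and responds.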
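Once a model is pulled or created, it appears in the output of ollama list, whose first column is the model name (Ollama appends :latest when no tag is given). The sketch below checks that list and pulls the model only if it is missing. The tag qvq-72b-preview is a placeholder rather than a confirmed Ollama library name, and has_model is a hypothetical helper.

```bash
# Placeholder tag: replace with the name your Ollama install actually uses.
MODEL="${MODEL:-qvq-72b-preview}"

# Read `ollama list` output on stdin and succeed if the exact tag is present.
# The first line is a header (NAME ID SIZE MODIFIED), so it is skipped.
has_model() {
  local want="$1"
  # Ollama lists untagged models as name:latest.
  case "$want" in *:*) ;; *) want="${want}:latest" ;; esac
  awk 'NR > 1 { print $1 }' | grep -Fxq -- "$want"
}

# Only talk to Ollama if the CLI is installed.
if command -v ollama >/dev/null 2>&1; then
  if ollama list | has_model "$MODEL"; then
    echo "$MODEL is already available"
  else
    ollama pull "$MODEL"
  fi
fi
```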

Step 3: Test and Fine-Tune

After installation, test your setup thoroughly:

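- Run basic prompts to confirm the setup works end to end.

- Experiment with Ollama’s generation parameters, such as temperature (higher values give more varied, creative output) and num_predict (maximum response length). These adjust sampling at inference time; they do not fine-tune the model’s weights.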
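The temperature and response-length settings can also be set per request through Ollama’s REST API, which accepts an options object on POST /api/generate. This sketch builds that request body in plain shell; json_escape, build_generate_payload, and ollama_generate are hypothetical helpers, the escaping only handles single-line prompts, and the defaults (temperature 0.7, num_predict 256) are illustrative rather than recommended values.

```bash
# Escape backslashes and double quotes so a single-line prompt is valid inside a JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build a non-streaming /api/generate request body.
# Args: model, prompt, temperature (default 0.7), num_predict (default 256).
build_generate_payload() {
  local model="$1" prompt="$2" temperature="${3:-0.7}" num_predict="${4:-256}"
  printf '{"model":"%s","prompt":"%s","stream":false,"options":{"temperature":%s,"num_predict":%s}}\n' \
    "$model" "$(json_escape "$prompt")" "$temperature" "$num_predict"
}

# Send the request to a local Ollama server and print the raw JSON response.
ollama_generate() {
  curl -s http://localhost:11434/api/generate -d "$(build_generate_payload "$@")"
}
```

For example, ollama_generate qvq-72b-preview "Explain quantum mechanics in simple terms." 0.2 128 asks for a more deterministic answer capped at 128 tokens, with the model tag again a placeholder.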

Example Workflow:

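```bash
# Shell example using the Ollama CLI.
# Placeholder tag: substitute the name shown by `ollama list` for your QVQ model.
MODEL="qvq-72b-preview"
ollama run "$MODEL" "Explain quantum mechanics in simple terms."
```

Step 4: Monitor Usage Metrics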

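Ollama runs models locally, so it does not bill per token or connect to Qwen’s API dashboards. Each response from its REST API does, however, report token counts: prompt_eval_count for the prompt and eval_count for the generated text. Log these to track usage and optimize workloads; if you also call Qwen through a hosted API, use that provider’s dashboard to avoid unforeseen overages.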
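If you save each non-streaming response from Ollama’s /api/generate endpoint as one JSON object per line, a short awk script can total the token counts Ollama reports. This is a sketch: the one-object-per-line log format is a choice made for this example, the fields are extracted with simple pattern matching rather than a full JSON parser, and sum_tokens is a hypothetical name.

```bash
# Total prompt and completion tokens from JSON responses, one object per line on stdin.
sum_tokens() {
  awk '
    # Return the integer value of "name":<number> on the current line, or 0 if absent.
    function field(name,    re, s) {
      re = "\"" name "\":[ ]*[0-9]+"
      if (match($0, re)) {
        s = substr($0, RSTART, RLENGTH)
        sub(/.*:[ ]*/, "", s)
        return s + 0
      }
      return 0
    }
    { p += field("prompt_eval_count"); e += field("eval_count") }
    END { printf "prompt=%d completion=%d total=%d\n", p, e, p + e }
  '
}
```

Appending each response to a file followed by a newline (for instance with an extra echo after each curl call) and running sum_tokens < usage.jsonl then prints running totals; usage.jsonl is an arbitrary file name.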

Benefits of Using Ollama with Qwen/QVQ-72B

- Simplicity: Ollama hides much of the complexity of downloading, configuring, and serving models.

- Customization: Developers can quickly package custom configurations (system prompts and default parameters in a Modelfile) for specific use cases like conversational assistants or summarizers.

- Resource Management: Running models locally avoids network round trips and per-token API fees, at the cost of needing capable hardware.
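As an example of that customization, Ollama lets you bake a system prompt and default parameters into a named model through a Modelfile and ollama create. The sketch below writes a summarizer Modelfile; the base tag, the output name qvq-summarizer, the parameter values, and the write_modelfile helper are all hypothetical choices for illustration.

```bash
# Write a Modelfile that layers a system prompt and default sampling
# parameters on top of a base model. Args: base model tag, output path.
write_modelfile() {
  local base="$1" path="${2:-Modelfile}"
  cat > "$path" <<EOF
FROM ${base}
PARAMETER temperature 0.3
PARAMETER num_predict 512
SYSTEM """You are a concise assistant. Summarize the user's text in at most five bullet points."""
EOF
}

# Placeholder base tag; replace it with a model you have pulled or imported.
write_modelfile "${MODEL:-qvq-72b-preview}" Modelfile

# Register the custom model only if the Ollama CLI is installed.
if command -v ollama >/dev/null 2>&1; then
  ollama create qvq-summarizer -f Modelfile
fi
```

Keeping the low temperature in the Modelfile means every call to the custom model starts from the same summarizer defaults, while individual requests can still override them.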

Key Takeaways: Why You Should Consider Qwen/QVQ-72B

To summarize everything we’ve covered about the Qwen/QVQ-72B model:

- Performance: It rivals and, in some cases, exceeds the benchmarks set by GPT-4 and Claude when tested for accuracy, reasoning, and creativity.

- Cost Efficiency: Offering better token compression and competitive API pricing, it makes enterprise-level NLP affordable.

- Usability: Platforms like Ollama simplify setup for both individual developers and organizations.

With its Preview release, Qwen/QVQ-72B is clearly positioned as a robust alternative in the expanding LLM ecosystem.

Bullet List Recap:

- High benchmark scores put Qwen/QVQ-72B in direct competition with GPT-4, especially in domains like reasoning and summarization.

- API pricing favors scalability, with discounted rates for enterprise users.

- Seamless integration with Ollama streamlines deployment while opening up creative opportunities for customized AI solutions.

Looking ahead, Qwen’s evolution promises enhanced multimodal functionality and further refinements that will continue to shift expectations in the world of AI-powered tools. Whether you’re a developer experimenting with AI or a business optimizing customer interactions, Qwen/QVQ-72B deserves a spot on your radar.