How I Connected Figma to My AI Coding Workflow with MCP

As a developer who frequently collaborates with designers, I’ve always struggled with the handoff process. Translating Figma designs into code often meant constant back-and-forth, taking screenshots, and manually measuring spacing and dimensions. That all changed when I discovered Figma’s MCP server integration.

Alongside Figma MCP, I’ve also been experimenting with Apidog MCP Server. While Figma MCP connects my design assets to AI tools, Apidog MCP Server does the same for API documentation. It lets AI coding assistants directly access and understand my API specifications from Apidog projects or OpenAPI files.

This has been game-changing for backend development tasks — I can simply ask the AI to “generate Java records for the Product schema” or “create MVC code for the /users endpoint” and it pulls the exact specifications directly from my documentation. The setup process is similarly straightforward with just Node.js and an Apidog access token. For teams working on both frontend and backend, these two MCP servers create a complete design-to-implementation pipeline that’s fully AI-assisted.

What Figma-MCP Solved for Me

The Figma Model Context Protocol (MCP) has fundamentally changed how I interact with design assets. Instead of the traditional design-to-code workflow, I can now have my AI coding assistant directly access and understand Figma designs through a standardized protocol.

For me, this means:

- No more manually copying color codes and measurements

- Direct access to component hierarchies and design systems

- The ability to generate code that accurately reflects the designer’s intent

Setting Up My Figma-MCP Environment

Getting this working in my development environment was surprisingly straightforward:

Prerequisites I Needed

- Node.js v16+ (I was already running v18)

- npm v7+ (or pnpm v8+, which I prefer for faster installs)

- My existing Figma Professional account

- A Figma API access token with read permissions

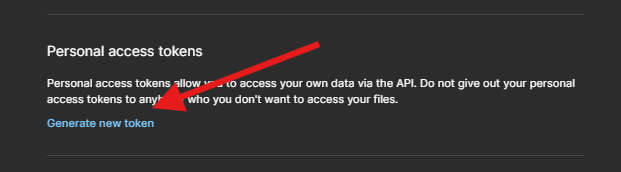

Step 1: Getting My Figma API Token

This part was critical. I needed to:

1. Log in to my Figma desktop app

2. Click my profile icon in the sidebar

3. Navigate to Settings → Security

4. Scroll to Personal Access Tokens

5. Generate a new token named “Dev_MCP_Integration”

6. Copy and securely store the token (Figma only shows it once)

I stored mine as an environment variable to avoid hardcoding it anywhere:

set FIGMA_API_KEY=figd_my_token_value

Step 2: Installing the MCP Server

I opted for the quick NPM installation method:

npx @figma/mcp-server --figma-api-key=%FIGMA_API_KEY%

The server started running on port 3333 by default. For my more complex projects, I’ve since cloned the repository locally for more customization options.
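If you would rather launch the server from a script than type the command each time, a minimal sketch might look like the following. It only assembles the argument list from the FIGMA_API_KEY environment variable and fails early when the variable is missing. The helper name build_server_command is hypothetical; the package name and flag are copied from the command above, not verified independently.

```python
import os


def build_server_command(env=None):
    """Return the npx argument list, reading the token from the environment.

    `env` defaults to os.environ; passing a plain dict makes the helper easy to test.
    """
    env = os.environ if env is None else env
    token = env.get("FIGMA_API_KEY", "").strip()
    if not token:
        # Fail early instead of starting the server with an empty key.
        raise RuntimeError("FIGMA_API_KEY is not set")
    # Package name and flag are taken from the command shown in this post.
    return ["npx", "@figma/mcp-server", f"--figma-api-key={token}"]
```

The returned list can be handed to subprocess.run. On Windows, npx is a .cmd shim, so the call may need shell=True or "npx.cmd" as the first element.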

Step 3: Connecting to My AI Coding Tools

The real magic happened when I connected the Figma MCP server to Cursor (my preferred AI-enhanced IDE):

- I made sure my Figma-MCP server was running on port 3333

- In Cursor, I went to Settings → MCP and added a new server

- I named it “Figma Designs” and selected the SSE option

- I entered http://localhost:3333 as the URL

- A green dot appeared, confirming the connection was successful
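When the green dot does not appear, the first thing worth ruling out is that nothing is listening on the port. Here is a small sketch of that check, assuming the server is on localhost:3333 as described above. The function name is_listening is my own, and the check only confirms that a TCP connection succeeds, not that the process behind it is actually the MCP server.

```python
import socket


def is_listening(host="localhost", port=3333, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (including timeouts) when nothing answers.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling is_listening() with no arguments checks the default port from this setup; pass a different port if you changed it.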

For my teammates using other AI tools, I shared this configuration snippet:

{
  "mcpServers": {
    "Figma Designs": {
      "command": "npx",
      "args": [
        "@figma/mcp-server",
        "--figma-api-key=YOUR_TOKEN_HERE"
      ]
    }
  }
}

My Workflow in Action

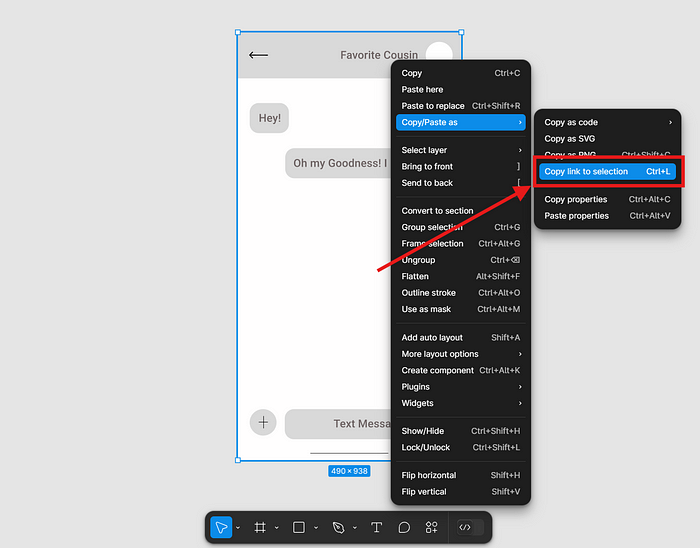

Now when I need to implement a design, my process looks like this:

1. I ask the designer to share the Figma link to the specific component

2. In Figma, I right-click the design and select “Copy/Paste As → Copy Link to Selection”

3. I open Cursor Composer with Agent Mode enabled

4. I paste the Figma link and ask something like: “Generate a React component for this design using Tailwind CSS”

The AI can now “see” the design through the MCP connection and generate accurate code that matches the visual design, component structure, and even design tokens.
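It helps to know what the pasted link actually carries: a file key in the path and a node ID in the query string. The sketch below pulls both out of a link. It assumes links shaped like https://www.figma.com/design/<fileKey>/<name>?node-id=12-345 (or the older /file/ path), and that the hyphen in the URL's node-id corresponds to a colon in the ID used elsewhere. The function name and the sample links in the usage note are illustrative only.

```python
from urllib.parse import urlparse, parse_qs


def parse_figma_link(url):
    """Return (file_key, node_id) from a Figma link; node_id is None if absent."""
    parsed = urlparse(url)
    parts = [p for p in parsed.path.split("/") if p]
    # Assumed path shapes: /file/<key>/<name> or /design/<key>/<name>.
    if len(parts) < 2 or parts[0] not in ("file", "design"):
        raise ValueError(f"not a recognized Figma file link: {url}")
    file_key = parts[1]
    # parse_qs already decodes percent-escapes such as %3A into ':'.
    values = parse_qs(parsed.query).get("node-id")
    # Assumption: links write the node ID with '-', while the ID itself uses ':'.
    node_id = values[0].replace("-", ":") if values else None
    return file_key, node_id
```

For a made-up link such as https://www.figma.com/design/AbC123/Dashboard?node-id=12-345, this yields the file key AbC123 and the node ID 12:345. A link without a node-id parameter points at the whole file, which is usually more context than the AI needs.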

Developer Tips I’ve Discovered

After using this setup for several weeks, I’ve found a few techniques particularly useful:

1. Inspect MCP responses: Running pnpm inspect in a separate terminal launches an inspector UI that shows exactly what data is being sent between Figma and the AI. This has been invaluable for debugging.

2. Use the get_node tool: When working with complex designs, I’ve found it more effective to have the AI focus on specific nodes rather than entire files:

"Implement just the navigation component from this Figma design"

3. Batch operations: For larger projects, I’ve written scripts that use the MCP server to batch-process multiple components at once.

4. Version control: I keep my MCP configuration in version control (minus the API key) so new team members can quickly get set up.
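For the version control tip, one way to make sure the key never lands in a commit is to scrub the config before saving the shared copy. This is a rough sketch rather than the script I actually use. It assumes the config has the mcpServers shape shown earlier and that the key is passed as a --figma-api-key= argument. The names redact_config, KEY_FLAG, and PLACEHOLDER are hypothetical.

```python
import copy

KEY_FLAG = "--figma-api-key="
PLACEHOLDER = "YOUR_TOKEN_HERE"


def redact_config(config):
    """Return a copy of an MCP config dict with any Figma API key replaced by a placeholder."""
    redacted = copy.deepcopy(config)  # leave the caller's config untouched
    for server in redacted.get("mcpServers", {}).values():
        if "args" in server:
            server["args"] = [
                KEY_FLAG + PLACEHOLDER if arg.startswith(KEY_FLAG) else arg
                for arg in server["args"]
            ]
    return redacted
```

Writing the result out with json.dumps(redact_config(config), indent=2) gives a file that is safe to commit, and new teammates only have to swap the placeholder for their own token.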

The Real Impact on My Development Process

This integration has genuinely transformed how I work with designers. What used to take days of back-and-forth now happens in minutes. The AI accurately interprets spacing, typography, color schemes, and component hierarchies directly from Figma.

For example, last week I implemented an entire dashboard UI in React that perfectly matched our designer’s vision in about 2 hours — a task that would have previously taken 1–2 days of meticulous work.

As both AI tools and the MCP protocol continue to evolve, I expect this workflow to become even more powerful. For developers working closely with design teams, I can’t recommend this integration enough.