Breaking Down Barriers: My Journey Connecting AI Models Using Dolphin MCP

As a developer who’s spent countless nights debugging API connections, I’ve always been frustrated by the fragmentation in the AI model landscape. One week I’m using OpenAI for a project, the next I need Ollama’s local inference to reduce costs, and then I’m trying to integrate specialized tools for specific tasks. It was a mess of different APIs, authentication methods, and response formats — until I discovered the Model Context Protocol (MCP) and Dolphin MCP.

The Integration Headache That Led Me Here

Let me paint you a picture of my life before MCP: I was building a content analysis tool that needed to process large volumes of text. OpenAI’s models were powerful but expensive for high-volume processing, while running everything through Ollama locally created bottlenecks for complex reasoning tasks.

My codebase was a nightmare of conditional logic:

    if task_requires_complex_reasoning:
        # Call OpenAI with one set of parameters
        response = openai_client.chat.completions.create(...)
    elif task_is_simple_classification:
        # Call Ollama with completely different parameters
        response = requests.post("http://localhost:11434/api/generate", ...)
    else:
        # Call yet another service with its own unique format
        # More conditional spaghetti code...
        pass

Every new model or tool I wanted to add meant more if-else branches, more authentication handling, and more response parsing. I was spending more time on integration code than on actual features!

My “Aha!” Moment with MCP

I stumbled across Model Context Protocol while researching ways to standardize my AI interactions. The concept immediately clicked: a unified protocol that lets different AI models share context, exchange data, and call tools using a standardized approach.

It was like discovering that all my devices could suddenly use the same charger. The relief was palpable.
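To make "a standardized approach" concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The snippet below is only a sketch of that message shape. The tool name `analyze_sentiment` and its `text` argument are hypothetical, and `build_tool_call` is a helper made up for illustration, not part of any library.

```python
import json
from itertools import count

# Simple incrementing request ids; JSON-RPC only needs them to be unique per session.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, purely for illustration.
request = build_tool_call("analyze_sentiment", {"text": "Great product!"})
print(json.dumps(request, indent=2))
```

Whatever model or tool sits behind the server, the client sends the same envelope, which is what removes the per-provider request formatting.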

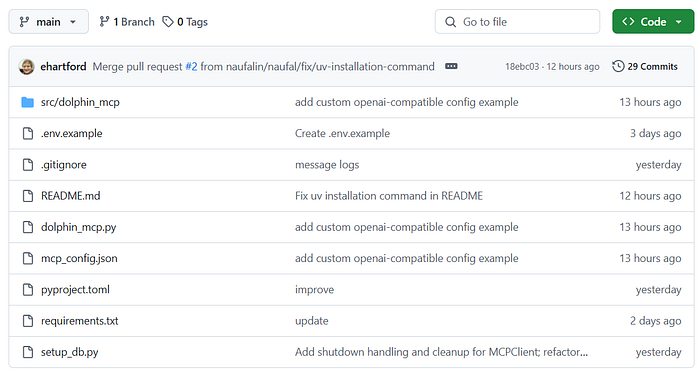

But the real game-changer came when I found Dolphin MCP — an open-source Python library that implements this protocol with minimal fuss. Within an hour of discovering it, I had replaced dozens of lines of custom integration code with a clean, consistent interface.

Setting Up My Multi-Model AI Workbench

Getting Dolphin MCP running on my Windows development machine was surprisingly straightforward. Here’s what worked for me:

1. First, I made sure my Python environment was ready:

    python --version  # Confirmed I had 3.8+

2. I installed the package directly from PyPI:

    pip install dolphin-mcp

3. I created my .env file with my API keys:

    OPENAI_API_KEY=my_actual_key_here
    OPENAI_MODEL=gpt-4o

4. I set up a basic mcp_config.json to define my server connections:

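    {
      "mcpServers": {
        "ollama-local": {
          "command": "ollama",
          "args": ["serve"]
        }
      }
    }

Note that "command" holds only the executable name and its arguments go in the "args" list, because the server process is spawned directly rather than through a shell.

The most time-consuming part was actually just finding my API keys across different services; the Dolphin MCP setup itself was surprisingly painless.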
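Since most of my early failures came from a missing key or a malformed config file, a small preflight check is worth having. The following is a minimal sketch, not something Dolphin MCP ships: `check_mcp_config` and `missing_env` are hypothetical helpers, and the only structure they assume is the `mcpServers` / `command` / `args` layout shown in the config above.

```python
import json
import os


def check_mcp_config(config: dict) -> list:
    """Return a list of human-readable problems found in an MCP config dict."""
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["'mcpServers' must be a non-empty object"]

    problems = []
    for name, spec in servers.items():
        if not isinstance(spec, dict):
            problems.append(f"{name}: server entry must be an object")
            continue
        command = spec.get("command")
        if not isinstance(command, str) or not command:
            problems.append(f"{name}: 'command' must be a non-empty string")
        # 'args' is treated as optional here, but if present it must be a list.
        if not isinstance(spec.get("args", []), list):
            problems.append(f"{name}: 'args' must be a list")
    return problems


def missing_env(names, environ=os.environ) -> list:
    """Return the variable names that are unset or empty in the environment."""
    return [name for name in names if not environ.get(name)]


# Inline JSON stands in for reading mcp_config.json from disk.
config = json.loads(
    '{"mcpServers": {"ollama-local": {"command": "ollama", "args": ["serve"]}}}'
)
print(check_mcp_config(config))
print(missing_env(["OPENAI_API_KEY", "OPENAI_MODEL"]))
```

Running this before the first query turns a confusing startup error into a short list of things to fix.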

The First Test: A Multi-Model Query

With everything set up, I ran my first test using the CLI tool:

    dolphin-mcp-cli "Analyze the sentiment of this customer feedback: 'Your product has completely transformed our workflow!'"

The magic happened behind the scenes: Dolphin MCP routed my query to the appropriate model, handled the authentication, processed the response, and returned a clean result. No more worrying about which model to call or how to format the request!

For more complex scenarios, I switched to the Python library:

    import asyncio

    from dolphin_mcp import run_interaction


    async def analyze_multiple_feedbacks(feedbacks):
        """Run a sentiment query for each feedback string, one at a time."""
        results = []
        for feedback in feedbacks:
            result = await run_interaction(
                user_query=f"Analyze sentiment: {feedback}",
                # Use length as a rough proxy for complexity when picking a model
                model_name="gpt-4o" if len(feedback) > 100 else "ollama",
                quiet_mode=True,
            )
            results.append(result)
        return results

    # This simple function saved me from writing 50+ lines of model-specific code

P.S. While setting up my testing environment, I found Apidog incredibly useful for debugging my MCP server endpoints, and it is free.
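The batch sentiment function earlier in this section awaits each feedback item in turn. If the items are independent, they could in principle be processed concurrently with `asyncio.gather`. The sketch below shows one way to do that with a concurrency cap. It takes the query function as a `run` parameter so it can be tried without any API keys; `pick_model`, `analyze_all`, `max_concurrent`, and `fake_run` are names invented here, and whether `run_interaction` is safe to call concurrently is an assumption I have not verified.

```python
import asyncio


def pick_model(feedback: str, threshold: int = 100) -> str:
    """Choose a model name using text length as a rough proxy for complexity."""
    return "gpt-4o" if len(feedback) > threshold else "ollama"


async def analyze_all(feedbacks, run, max_concurrent=4):
    """Analyze all feedback items concurrently, at most max_concurrent at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def analyze_one(feedback):
        async with semaphore:
            return await run(
                user_query=f"Analyze sentiment: {feedback}",
                model_name=pick_model(feedback),
                quiet_mode=True,
            )

    # gather returns results in the same order as the input list.
    return await asyncio.gather(*(analyze_one(f) for f in feedbacks))


async def fake_run(user_query, model_name, quiet_mode):
    """Stand-in for a real model call so the sketch runs offline."""
    await asyncio.sleep(0)
    return f"[{model_name}] {user_query}"


results = asyncio.run(analyze_all(["Great!", "x" * 150], fake_run))
for line in results:
    print(line[:60])
```

In a real project, `run_interaction` would be passed in place of `fake_run`. Pulling the model choice into `pick_model` also makes the routing rule easy to unit test on its own.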

Real-World Application: My Content Analysis Pipeline

With Dolphin MCP in my toolkit, I rebuilt my content analysis pipeline to leverage multiple models efficiently:

- Initial processing with Ollama running locally (fast and free)

- Complex reasoning tasks routed to OpenAI (when needed)

- Specialized analysis using custom tools connected via MCP

The code was clean, maintainable, and most importantly, extensible. When a new model or tool comes along, I can add it to my MCP ecosystem without touching the core application logic.
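The "local first, OpenAI when needed" flow can be sketched as a two-step escalation: try the cheap model, then re-run on the stronger one only if the first answer looks inadequate. This is an illustration under stated assumptions rather than my exact pipeline code. The `run` callable, the `needs_escalation` predicate, and the `fake_run` and `looks_unsure` stand-ins are all hypothetical, and the model names simply reuse the ones from earlier in the post.

```python
import asyncio


async def analyze_with_escalation(text, run, needs_escalation,
                                  local_model="ollama", strong_model="gpt-4o"):
    """Try the local model first; re-run on the stronger model only if needed.

    Returns a (model_name, answer) tuple naming the model that produced the answer.
    """
    first_pass = await run(user_query=text, model_name=local_model, quiet_mode=True)
    if not needs_escalation(text, first_pass):
        return local_model, first_pass
    second_pass = await run(user_query=text, model_name=strong_model, quiet_mode=True)
    return strong_model, second_pass


async def fake_run(user_query, model_name, quiet_mode):
    """Offline stand-in: the 'local' model gives up on longer inputs."""
    if model_name == "ollama" and len(user_query) > 40:
        return "unsure"
    return f"{model_name}: ok"


def looks_unsure(text, answer):
    """Hypothetical escalation rule: escalate when the answer says it is unsure."""
    return "unsure" in answer.lower()


short_result = asyncio.run(analyze_with_escalation("Short note", fake_run, looks_unsure))
long_result = asyncio.run(analyze_with_escalation("A much longer piece of text " * 3, fake_run, looks_unsure))
print(short_result)
print(long_result)
```

The escalation rule is the part that needs real thought in practice; a keyword check like `looks_unsure` is only a placeholder for whatever quality signal fits the task.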

My favorite part? The dramatic reduction in code complexity. What used to be hundreds of lines of model-specific logic became a streamlined workflow focused on the actual task rather than the integration details.

The Developer Experience Improvements

Beyond the technical benefits, Dolphin MCP has transformed my development workflow in several ways:

- Faster prototyping: I can switch models with a simple parameter change, making it easy to experiment with different approaches.

- Cleaner codebase: No more model-specific branches cluttering my logic.

- Better error handling: Standardized error responses make debugging much simpler.

- Cost optimization: I can use expensive models only when necessary, falling back to local models for routine tasks.

- Future-proofing: As new models emerge, I can add them to my toolkit without rewriting application code.

The CLI tool has also become my go-to for quick tests and experiments. Instead of setting up separate environments for different models, I can test ideas across multiple providers with a single command.

Lessons Learned and Tips for Fellow Developers

If you’re considering implementing Dolphin MCP in your own projects, here are some tips from my experience:

- Start with the CLI tool to get comfortable with the workflow before diving into the Python library.

- Use environment variables for all your API keys and configuration settings — it makes switching between development and production environments much easier.

- Create model-specific profiles in your configuration for different types of tasks. I have profiles for “quick-analysis” (Ollama), “deep-reasoning” (OpenAI), and “specialized-tasks” (custom tools).

- Implement graceful fallbacks in your code. Even with MCP, models can sometimes fail, so having a fallback strategy is important.

- Contribute to the project! Dolphin MCP is open-source, and the community benefits from more developers sharing their use cases and improvements.
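The profile and fallback tips combine naturally: each profile can be an ordered list of model names, tried in turn until one succeeds. Here is a minimal sketch, assuming the profiles live in a plain Python dict rather than in Dolphin MCP's own configuration. `PROFILES`, `run_with_fallback`, and `flaky_run` are hypothetical names, and the `run` parameter stands in for whatever function actually queries the model.

```python
import asyncio

# Hypothetical profiles: each maps to model names in order of preference.
PROFILES = {
    "quick-analysis": ["ollama", "gpt-4o"],
    "deep-reasoning": ["gpt-4o", "ollama"],
}


async def run_with_fallback(query, profile, run, profiles=PROFILES):
    """Try each model in the profile in order; return (model_name, answer)."""
    errors = []
    for model_name in profiles[profile]:
        try:
            answer = await run(user_query=query, model_name=model_name, quiet_mode=True)
            return model_name, answer
        except Exception as exc:  # Broad on purpose: any failure triggers the fallback.
            errors.append(f"{model_name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


async def flaky_run(user_query, model_name, quiet_mode):
    """Offline stand-in where the local model is unreachable."""
    if model_name == "ollama":
        raise ConnectionError("local server not running")
    return f"{model_name} answered: {user_query}"


used_model, answer = asyncio.run(
    run_with_fallback("Summarize this ticket", "quick-analysis", flaky_run)
)
print(used_model, "->", answer)
```

Returning the model name alongside the answer makes it easy to log how often the fallback path is taken, which is a useful signal when tuning costs.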

Conclusion: A New Paradigm for AI Development

As developers, we often get caught up in the hype around specific models or providers, but the real power comes from being able to combine different tools seamlessly. Dolphin MCP has fundamentally changed how I think about AI integration, shifting my focus from “which model should I use?” to “what am I trying to accomplish?”

If you’re building applications that leverage AI capabilities, I strongly recommend giving Dolphin MCP a try. It’s transformed my development workflow from a fragmented mess of different APIs into a cohesive, unified approach that’s both more powerful and easier to maintain.

The future of AI development isn’t about picking the right model — it’s about orchestrating multiple models and tools to create solutions greater than the sum of their parts. And tools like Dolphin MCP are making that future accessible to developers today.

Happy coding, and may your API integrations be forever simplified!